Edited: 2024-09-25, added remoteUser: ${localEnv:USER} to avoid chowning the workspace folder to the root user

Onboarding to a new project can be a wild ride. Sometimes, you just have a codebase that "worked on the dev's machine" and no instructions beyond that. Other times there's a documented series of commands to run on your machine that you're generally expected to just trust to not mess things up. And lately, there are a few tools available to make that process more consistent across many projects, and therefore less of a burden on both the maintainers and those being onboarded.

How it used to be

You might be expecting "back in my day" here, but I have to admit that while I'm not as young as I was when I started this blog, I'm not that old either. Instead, I'll say that, traditionally, onboarding onto an active project involved finding and running some set of commands and hoping they worked on your system.

If you ran exactly the same system as the original authors, and had worked on similar projects to them in the past, then the commands would likely work... and that's the problem. Very rarely did people start new projects on blank-slate machines; instead, they would start them on systems that already had tools, libraries, and other projects installed. As a result, when someone new came on with a different system environment, they would very commonly run into issues like incorrect versions, missing libraries, etc.

Projects that commonly had newer developers join, whether through popularity, commercial success, or seasonality (college courses), would typically solve this problem by building and maintaining robust setup scripts. These scripts would "know" to check for things previous onboardings had revealed, such as which Linux distro was running, which versions of software were installed or missing, and sometimes even whether the underlying hardware was 32- or 64-bit.
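To give a flavor of the checks those setup scripts accumulated, here's a minimal POSIX shell sketch. The function names (detect_distro, detect_word_size) are made up for this example, and it assumes the host provides /etc/os-release and getconf, which is exactly the sort of assumption these scripts had to keep revisiting.

```shell
#!/bin/sh
# Sketch only: function names are hypothetical, not from any real project.

# Print the distro ID (e.g. "ubuntu") from an os-release style file,
# or "unknown" if the file is missing or has no ID field.
# Defaults to /etc/os-release.
detect_distro() {
  os_release_file="${1:-/etc/os-release}"
  if [ -r "$os_release_file" ]; then
    # os-release files are shell-compatible, so source the file in a
    # subshell to avoid leaking its variables into the calling script.
    ( ID=unknown; . "$os_release_file"; printf '%s\n' "$ID" )
  else
    printf 'unknown\n'
  fi
}

# Print the userland word size in bits (typically 32 or 64).
detect_word_size() {
  getconf LONG_BIT
}
```

A setup script would then branch on the results, for example with a case statement on "$(detect_distro)" to pick between package managers.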

However, all projects, no matter their popularity, would constantly get emails (or issues opened) with various error logs from developers trying to use the project on older or newer machines. The popular projects would deal with them and reduce the frequency, while less popular projects would see these issues languish due to the difficulty of debugging these sorts of problems asynchronously. The maintainers don't have access to a machine with these problems, only the reporter does, and if the maintainer asks a question a day later, the likelihood that the reporter ever comes back is very low.

Why it was a problem

Not only is debugging those sorts of problems difficult for open-source projects, but it's still annoying for companies. Every time a new engineer is hired, or comes to you from another team, they have to spend time figuring out how to set up their machine for your project. A monorepo potentially solves that for transfers, but not for wholly new engineers. Well-resourced teams can solve this by creating disk images that are ready to go, but creating and maintaining those is its own skill entirely. I'll have a post coming up next year about sysadmin skills being rare despite being so valuable, and this is one of those skills.

You might be wondering why these problems come up so often, and it's because environments vary so wildly that it's easy to run into issues. Here's an abbreviated list of the sorts of issues that I've personally seen and had to solve:

- Project was written/built for Linux, engineer uses Mac OS X (or, more rarely in my circles, Windows)

- Project scripts assume bash is the default shell, engineer has set up zsh or fish

- Project scripts assume certain files are in /etc/, like /etc/lsb-release, but only some Linux distros have that file

- Project binaries can run, but not be compiled, due to missing "dev" libraries, such as libboost-atomic1.74-dev even though libboost-atomic1.74.0 is installed and linkable.

- Project A uses gcc-5 and won't compile with gcc-7 or later, while Project B wants to upgrade to gcc-8. Some Linux distros make it easy to install multiple versions of gcc, but you still have to take care to set the right environment variables. Others only allow one version, so engineers switching between projects constantly have to install/reinstall GCC.

- Same as above, but for npm, node, Python, etc. Often the OS only allows one version of these tools to be installed, so more tools are needed to manage them all.
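To make the gcc item concrete: on distros that ship versioned binaries such as gcc-5 alongside gcc-8, a project script can pick its compiler explicitly through the conventional CC and CXX variables instead of relying on whatever gcc happens to be the default. This is a minimal sketch; the function name use_gcc is hypothetical, and the gcc-N / g++-N naming is an assumption that holds for Debian/Ubuntu-style packaging but not everywhere.

```shell
#!/bin/sh
# Sketch only: use_gcc is a made-up helper name.

# Export CC/CXX for the requested GCC major version, or fail loudly
# if no gcc-N binary is on PATH. (Assumes g++-N is installed alongside.)
use_gcc() {
  version="$1"
  if ! command -v "gcc-$version" >/dev/null 2>&1; then
    echo "gcc-$version not found on PATH; install it or adjust the version" >&2
    return 1
  fi
  CC="gcc-$version"
  CXX="g++-$version"
  export CC CXX
}
```

A project's build script would call something like use_gcc 5 before invoking make or cmake, both of which honor CC and CXX.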

What changed in the software world

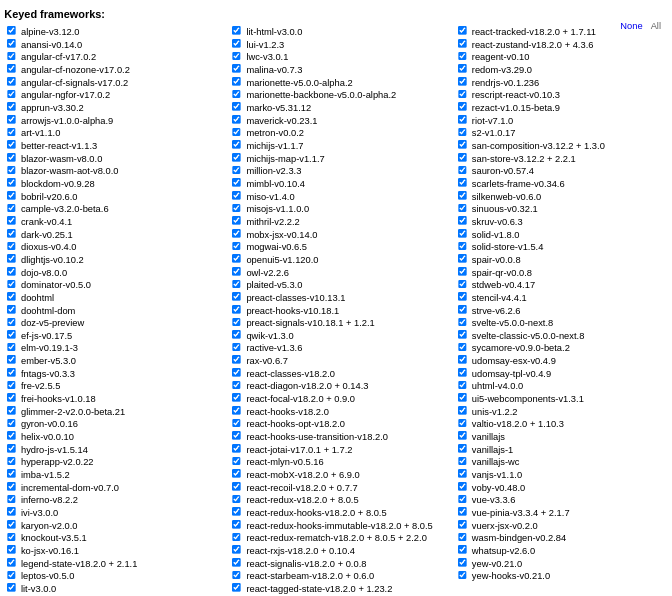

The software ecosystem is constantly evolving, and for proof one can simply look at the number of Javascript HTML manipulation frameworks with benchmarks:

Unrelated to Javascript frameworks, we also got containers. They can be referred to as Docker, LXC, rkt, OCI, podman containers and more, but generally I'll be referring to the concept of network, disk, and process namespacing that allows you to run what looks like a Linux VM inside a Linux host, but without the overhead of a VM.

Containers were initially meant for creating production environments, so that a server deployed to today or two months from now can get to the exact same state as what's running on a developer's machine. Since so much of the work was around the idea of creating lightweight container images with only one service in it, it took a while for the idea of creating a container for developer tooling to become popular.

Containers save the day

Back in 2017, containers were so popular that a whole new cluster orchestration framework based entirely around containers was gaining steam (Kubernetes). However, they weren't yet popular for developer tooling, so as I set up a new project for work I had to decide how we were going to set up developer environments. Luckily, a lot of what we were doing in the early days was based on an open-source project that was an early adopter of developer containers.

I then created, based on that project's scripts, docker/dev/start.sh, docker/dev/remove.sh, and docker/dev/into.sh, which would create, remove, and launch a shell in a dev container, respectively. There was also a docker/dev/Dockerfile that described how to build the container image, and since we were onboarding engineers relatively often (once a month initially, but eventually several a week), I set up a docker/dev/build.sh that would build and upload the container image that the start.sh script would pull and use locally.
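Stripped of everything they accumulated over the years, those three scripts boil down to a handful of docker commands. Here's a rough sketch of that core, not the actual scripts: the image name, container name, and /workspace mount point are placeholders I picked for illustration, and the DOCKER variable exists only so the binary can be swapped out (for podman, or for echo when dry-running).

```shell
#!/bin/sh
# Sketch only: the image name, container name and mount point are
# placeholders, not the values the original scripts used.
DOCKER="${DOCKER:-docker}"
DEV_IMAGE="${DEV_IMAGE:-example.com/myproject/dev:latest}"
DEV_NAME="${DEV_NAME:-myproject-dev}"

# start.sh: pull the prebuilt image and start a long-lived container
# with the current checkout mounted inside it.
dev_start() {
  "$DOCKER" pull "$DEV_IMAGE" &&
  "$DOCKER" run -d --name "$DEV_NAME" \
    -v "$PWD:/workspace" -w /workspace \
    "$DEV_IMAGE" sleep infinity
}

# into.sh: open an interactive shell in the running container.
dev_into() {
  "$DOCKER" exec -it "$DEV_NAME" bash
}

# remove.sh: stop and delete the container.
dev_remove() {
  "$DOCKER" rm -f "$DEV_NAME"
}
```

Each real script would call one of these functions as its last line. Most of the remaining length in scripts like these tends to come from handling things such as user IDs, credentials, and already-running containers.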

We then went for years, updating the Dockerfile as needed to install more tools, newer versions of them, and we even updated the version of the container's OS (Ubuntu 16.04 LTS to 20.04 LTS). All the while, the host machines only needed a few tools installed, namely docker-ce. During my time there, I never updated my desktop OS (Ubuntu 16.04) even though the container was based on a newer OS, and we never had to worry about what was installed or not on the host of any laptop or desktop of any of the engineers.

Today, it's (actually) better

Back in 2017, or even as recently as 2021, there weren't many options beyond creating and maintaining these scripts entirely yourself. This year, as I was looking to set up multiple new projects, I looked into the space again and found devcontainers (or, by their domain name, containers.dev), which is a spec and a tool that came out of the VS Code team to define everything in those 3 scripts in a more modular way.

Unfortunately, as a result of its origins, it's pretty tied to VS Code. There is a "reference CLI", but it doesn't (yet) implement the stop functionality. It seems the way to benefit the most from the spec is to use it with VS Code, GitHub Codespaces, or other cloud providers like Gitpod.

However, while researching one last time for this post, I found one tool that actually does what I want. I found them previously, but their docs were confusing and seemed to imply they didn't support what I wanted, but I tried them out anyway and it turns out they always did!

How to replace all those scripts, but not really

Honestly, devpod.sh is pretty good, except their docs seem to be mainly around IDEs, remote hosts, and cloning new projects. First, we need to define a devcontainer.json somewhere/somehow, and then we can use devpod for everything else.

For devcontainer.json, without using a local VS Code (the web version doesn't support the extension), you just have to wade through https://containers.dev/ and figure things out. To help you, I'll paste what I consider a file that you can start "from scratch" with and add relevant features and changes to from there:

    {
      "name": "fahhem's 'scratch' devcontainer",
      "image": "ubuntu:20.04",
      "features": {
        "ghcr.io/devcontainers/features/docker-outside-of-docker:1": {
          "version": "latest",
          "enableNonRootDocker": "true",
          "moby": "false"
        }
      },
      "remoteEnv": {
        "LOCAL_WORKSPACE_FOLDER": "${localWorkspaceFolder}"
      },
      "remoteUser": "${localEnv:USER}"
    }

Oh boy, do I hate JSON as a human-writable format. Don't worry, plenty of people hate YAML too, but since it's usually good enough, here's the same file in YAML that can be automatically converted to JSON:

    name: "fahhem's 'scratch' devcontainer"
    image: ubuntu:20.04
    features:
      ghcr.io/devcontainers/features/docker-outside-of-docker:1:
        version: latest
        enableNonRootDocker: "true"
        moby: "false"
    remoteEnv:
      LOCAL_WORKSPACE_FOLDER: ${localWorkspaceFolder}
    remoteUser: ${localEnv:USER}

And you can convert that using tools like yq:

    cat devcontainer.yaml | yq -ojson > devcontainer.json

Anyway, once you have that, you can add/remove features from https://containers.dev/features pretty easily or just grab another template from https://containers.dev/templates

How to actually use that devcontainer.json

I would still create docker/dev/start.sh in the future, but instead of ending up as hundreds of lines, it would mainly be some code to download devpod if it's not installed, then:

devpod up . --devcontainer-path devcontainer.json

And docker/dev/into.sh would simply be: devpod ssh .

And docker/dev/remove.sh would be: devpod stop .
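Put together, the three scripts could share something like the following sketch. The function names are hypothetical, and instead of downloading devpod (I'm not going to guess at a download URL here) it only checks that devpod is on the PATH and points at the site otherwise. The devpod invocations are the same ones quoted above.

```shell
#!/bin/sh
# Sketch only: function names are hypothetical. The devpod commands
# are the ones quoted in this post.
DEVPOD="${DEVPOD:-devpod}"

# Fail early with a pointer to the install docs instead of guessing
# a download URL here.
require_devpod() {
  if ! command -v "$DEVPOD" >/dev/null 2>&1; then
    echo "devpod not found; install it from https://devpod.sh and re-run" >&2
    return 1
  fi
}

# docker/dev/start.sh
dev_start() {
  require_devpod && "$DEVPOD" up . --devcontainer-path devcontainer.json
}

# docker/dev/into.sh
dev_into() {
  require_devpod && "$DEVPOD" ssh .
}

# docker/dev/remove.sh
dev_remove() {
  require_devpod && "$DEVPOD" stop .
}
```

Each script would source this and call its one function, which keeps the devpod-specific details in a single place if the flags ever change.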

I would still create those scripts since I don't want to rely on scripts or aliases installed on host machines, so the only real "onboarding" steps would be:

git clone ${githost}/${workspacename}

cd ${workspacename}

docker/dev/start.sh

docker/dev/into.sh